My name is Aris Xenofontos and I am an investor at Seaya Ventures. This is the market update version of the Artificial Investor that covers the top AI developments of the previous month.

2 billion dollars for senior OpenAI alumni, a 3-billion-dollar AI acquisition that proves that product execution and UX matter, the convergence of AI, Search and Social, growing corporate AI adoption, the “AI trade war” rollercoaster, the new state-of-the-art models launched by the “AI Big 3”, and the new agent application that hit 10 million dollars in annualised revenue in 9 days.

All this and much more in our AI market update for April 2025.

🚀 Jumping on the bandwagon

Last month’s largest rounds highlight investor appetite for senior OpenAI alumni (ex-CTO Mira Murati’s Thinking Machines raises 2 billion dollars, while Google and Nvidia invest in Safe Superintelligence, ex-CSO Ilya Sutskever’s startup), drug discovery (600 million dollars for Isomorphic Labs), robotics (500 million dollars for Agility Robotics and Nuro) and audio/video generation (500 million dollars for Runway and Sesame AI).

In terms of exits, the biggest news comes from OpenAI and its potential acquisition of Windsurf, an AI coding application, for a rumoured 3 billion dollars. This is a very interesting deal, as it highlights the value of verticalised applications and the ability of product execution to create a moat. Stay tuned: we will publish a dedicated Artificial Investor issue on this topic once the acquisition closes. Infinite Reality made another acquisition (after Napster), this time paying 500 million dollars for Touchcast, a GenAI platform that allows users to create interactive videos and Web experiences. Other AI acquisitions with undisclosed amounts include Humach/Markets EQ (AI voice analytics), Splice/Spitfire Audio (sound generation) and Hugging Face/Pollen Robotics (open-source humanoid robots).

📈 On pink paper

♟️ The GenAI chess game continues

The chess game in GenAI is ongoing. Despite billions of dollars invested in the infrastructure layer, infrastructure players seem eager to make money at the application layer. OpenAI is the undisputed leader in the AI chatbot race, having grown revenue by 30% in just 3 months, which is impressive considering the company's size. ChatGPT boasts 500 million weekly active users, estimated to be equivalent to at least 100 million daily active users. That is at least 6x more than the number two, DeepSeek, which has reached 16 million active users. Gemini follows at number three with about 11 million daily active users. Microsoft's Copilot and Claude trail far behind with 2.5 million daily active users.

But that’s not enough. OpenAI is aiming to reach 120 billion dollars in revenue by 2029. Currently, it makes $15 per user, so under its existing business and revenue model it would need around 8 billion users, roughly the entire population of our planet, to hit this target. To expand revenue per user, the Silicon Valley company launched an AI shopping experience, where one-click purchases can be made from AI Web search results. There have also been leaks suggesting it is planning to launch a social AI app, allowing users to share AI chat histories, have joint AI chats with others and view the AI chat feed of their contacts.
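The back-of-the-envelope arithmetic behind that claim is easy to check. A minimal Python sketch, using only the two figures quoted above (the 120-billion-dollar 2029 target and $15 of revenue per user) and assuming revenue per user stays flat:

```python
# Back-of-the-envelope check: users needed to hit a revenue target
# at a fixed revenue per user (both figures are the ones quoted in the text).
target_revenue_usd = 120_000_000_000  # OpenAI's 2029 revenue goal
revenue_per_user_usd = 15             # current revenue per user

users_needed = target_revenue_usd // revenue_per_user_usd
print(f"Users needed: {users_needed / 1e9:.1f} billion")  # Users needed: 8.0 billion
```

Doubling revenue per user halves the number of users needed, which is why shopping and social features matter so much to the plan.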

An element of convergence is also evident. Google, the king of Search, launched AI Mode in Search, which provides a summary at the top of the results, bridging the gap between traditional Google Search and OpenAI's Web search. Convergence is also coming from social apps: Meta announced, at its first GenAI developer conference, that it is launching a standalone AI chatbot app with social network functionality, as well as a Llama API that will compete directly with OpenAI's API and Google's Gemini API.

In summary, the battle is on, and the race is heating up. Players are desperately seeking to expand their product suites and functionalities to generate more revenue per user, focusing on the application layer and convergence between social, search, and AI.

📃 Data, data, data

The 2025 edition of the Stanford AI Index report was published last month. A few of the highlights: 1) AI is being democratised, as models get smaller (and therefore more ubiquitous) and cheaper…

…however, complex reasoning remains a challenge: models continue to score low on PlanBench (Blocks World planning tasks) and are significantly outperformed by humans on tasks that take more than a day.

2) AI's impact remains mixed: it is revolutionising healthcare (200+ FDA-approved AI medical devices) while causing a comparable number of harm incidents.

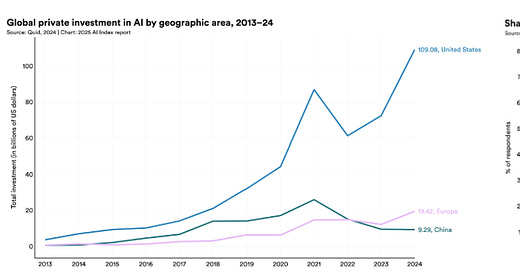

3) The US leads private investment in AI, reaching 100 billion dollars in 2024, while GenAI is nearly catching up with more traditional AI in enterprise adoption.

In other market news:

Cursor grows from 100 million dollars to 300 million dollars ARR within a few months

Scale AI anticipates 2 billion dollars in revenue, a signal of the sustained strong growth of the AI market

Gartner forecasts worldwide GenAI spending to reach $644 billion in 2025

US survey shows that the public and experts are far apart in their enthusiasm and predictions for AI

⚔️ A double-edged sword

🥊 US/China AI war escalates with Liberation Day

Nvidia said it will take a 5.5-billion-dollar financial hit after Washington placed fresh restrictions on the export of its H20 AI chips to China, sending the stock down about 7% on the day. A few days later, Elon Musk warned that a rare earth magnet shortage, caused by China’s announced restrictions on the export of rare earth elements (REE) to the US, would delay the launch of Tesla’s humanoid robots. This came only a few days after reports that Apple had chartered cargo flights to move 1.5 million iPhones from India to the US, having stepped up production there to navigate US import tariffs on China. Stock markets reacted negatively to the trade war news, with the S&P 500 falling 14% after the US import tariff announcement, before recovering to a 2.5% decline vs. pre-announcement levels within three weeks.

We wrote an extensive piece on the topic in Issue 50: What AI tells us about the future of the US/China trade war. We analysed the AI supply chain and concluded that it is geographically concentrated. The US contributes crucial design, R&D and some high-tech manufacturing (like fab equipment and some chips), whereas China (and East Asia more broadly) contributes raw materials refining, the bulk of manufacturing capacity and low-cost assembly. Taiwan and South Korea are indispensable for advanced semiconductors, while China is indispensable for materials and manufacturing scale. Our layer-by-layer US/China battle ended in a 5-3 victory for China. And the score does not tell the whole story: American dependence on China in key areas, such as smartphones, computers and batteries, is very high, with 75%+ of supply coming from the Red Dragon.

As a result, we believe that China has more leverage than its American counterpart. As the US continues with its nationalistic trade war tactics, we expect further escalation. So far, China has responded relatively gently, with the obvious countermeasures, but it could introduce export tariffs on REEs or even ban their export to the US and its close allies.

Unfortunately, AI innovation is being hit on both the supply side (hardware supply chain disruptions) and the demand side (financial disruption for the corporates who buy AI technology, and macro uncertainty reducing investors' funding appetite). Our prediction is that a deal will probably be made before the end of the year (in line with one of our 2025 AI predictions). Some companies will announce investments in the US, a couple of domestic manufacturers will onshore (like Nvidia’s recent announcement that it will build and test Blackwell chips in Arizona and Texas), another couple will “friendshore” and some foreign producers will announce US factory construction projects. Nevertheless, given the construction lifecycle of many stages of the AI supply chain, it will take 5-10 years before we see a meaningful change in the power dynamics of the AI supply chain.

🔦 Every day we know more

Another report shed more light on how the software engineering job is changing. This is the second edition of one of our favourite reports, because it is built bottom-up from the real actions of paying users who rely on AI to solve problems in their daily work. The Anthropic Economic Index analysed 500,000 interactions between software developers and Claude. The results showed that 1) the vast majority (80%) of users used AI for automation (as opposed to augmentation), 2) the most common languages were Web-development ones (JavaScript, HTML), which suggests that front-end development is being disrupted first while back-end development remains “protected” for now, and 3) startups are the main early adopters in the business world, with 3x higher adoption than large companies (75% of business conversations vs. 25% for enterprises).

In other risk-related news:

EU unveils biggest AI strategy yet, including simplifying the AI Act. This demonstrates the change in sentiment in the region and the shift from AI Safety to AI Competitiveness.

By 2030, data centers will require slightly more energy than Japan consumes today. Demand from AI-optimized facilities alone is set to quadruple, the IEA predicts.

🧩 Laying the groundwork

🔢 Models

📈 The AI Big 3 launch new state-of-the-art models

Meta, OpenAI and Google all launched SOTA models in April. The model launches demonstrate the current GenAI trends:

Cost effectiveness to enable mass adoption

Long context window to expand the use cases

Optimised for coding to support the use case with the most demand

Native multimodality that helps unlock advanced audiovisual use cases

Specifically, Meta launched Llama 4, which incorporates:

Mixture-of-Experts (MoE) architecture, composed of “expert” sub-networks and a “gating” network that dynamically routes each input to the appropriate experts. This enables conditional computation, making large networks more efficient.

Native multimodality achieved by joint pre-training on massive text/image/video datasets, which leads to better performance on multimodal prompts.

Ultra-long context that extends to 10 million tokens for the Llama 4 Scout version (5x longer than the previous leader, Gemini Pro), which expands the scope of information a model can access in one go. Nevertheless, the model still performs poorly on questions that require combining content from across the context window.
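To make the gating idea concrete, here is a minimal, dependency-free Python sketch of a Mixture-of-Experts layer with generic top-k routing. It is an illustration under simplifying assumptions, not Llama 4's implementation: each expert is a single random linear map rather than an MLP, the gate is a plain linear scoring layer, and the names (`ToyMoE`, `top_k`) are hypothetical. What it shows is conditional computation: for every input, only `top_k` of the experts run.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class ToyMoE:
    """Hypothetical toy MoE layer: a linear gate plus linear 'experts'."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Gating network: one weight row per expert, producing one score per expert.
        self.gate = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each expert is a dim x dim linear map (real experts are small MLPs).
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]
        # Counts how often each expert actually runs.
        self.calls = [0] * num_experts

    def route(self, x):
        """Pick the top_k highest-scoring experts and normalise their weights."""
        scores = matvec(self.gate, x)
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[: self.top_k]
        weights = softmax([scores[i] for i in chosen])
        return chosen, weights

    def forward(self, x):
        """Weighted sum of the chosen experts' outputs; all other experts are skipped."""
        chosen, weights = self.route(x)
        out = [0.0] * len(x)
        for i, w in zip(chosen, weights):
            self.calls[i] += 1
            y = matvec(self.experts[i], x)
            out = [o + w * yi for o, yi in zip(out, y)]
        return out


moe = ToyMoE(dim=4, num_experts=8, top_k=2)
rng = random.Random(1)
tokens = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(10)]
outputs = [moe.forward(t) for t in tokens]
print("expert calls:", moe.calls, "total:", sum(moe.calls))  # total: 10 tokens x 2 experts = 20
```

Because only 2 of the 8 experts fire per token here, compute per token scales with `top_k` rather than with the total number of experts, which is the efficiency argument behind MoE models. Production systems typically also add load-balancing terms so the gate does not overuse a few experts.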

OpenAI launched five new models grouped in two families:

The general-purpose GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. GPT-4.1 scored higher than GPT-4o in the benchmarks, with a strong improvement in coding and a longer context window (1 million tokens).

The reasoning models o3 and o4-mini. Their reasoning effort is configurable, which lets users control the trade-off between reasoning performance and cost. The models also decide by themselves when and how to use tools, such as Web search, image editing and coding.

Google launched Gemini 2.5 Pro (Preview edition), a new version of its Gemini Pro series with better coding capabilities. The model powers Google’s new AI coding tool, Firebase Studio, and leads the Web automation benchmarks.

Other model-related news includes:

Google integrates its video-generation model Veo 2 into Gemini API

Dream 7B is a new open-source large diffusion language model

Tavus launched Hummingbird-0, a lip-sync model with photorealistic zero-shot accuracy

🕵️ Agents

Another month, another set of significant milestones in the agentic world.

To begin with, at the Application Layer, another emerging startup generated buzz by reaching 10 million dollars of annualised revenue within 9 days! This time the company is called GenSpark. It launched a Web-focused agent that can look up and collect information from the Internet, fact-check its results in a separate step, and then put everything together into slides or sheets. It can also take actions like sending emails and making phone calls. Some example use cases include:

converting a five-hour YouTube video into 10 slides

creating a minute-long South Park episode about recent news, or

analysing U.S. earthquake data to generate a detailed report with tables and charts
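The collect, verify, then assemble flow described above can be sketched as a tiny pipeline. This is a hypothetical illustration, not GenSpark's implementation: the "Web" is a hard-coded dictionary of sources, fact-checking is reduced to keeping only claims corroborated by at least two independent sources, and the function names (`gather`, `fact_check`, `assemble`) are made up for the example.

```python
from collections import defaultdict


def gather(sources):
    """Step 1: collect every (claim, source) pair from the hypothetical sources."""
    return [(claim, name) for name, claims in sources.items() for claim in claims]


def fact_check(pairs, min_sources=2):
    """Step 2: a separate check that keeps only claims backed by >= min_sources."""
    support = defaultdict(set)
    for claim, source in pairs:
        support[claim].add(source)
    # Dicts keep insertion order, so claims stay in order of first appearance.
    return [claim for claim, backers in support.items() if len(backers) >= min_sources]


def assemble(claims, per_slide=2):
    """Step 3: group the verified claims into slides of per_slide bullet points."""
    return [claims[i : i + per_slide] for i in range(0, len(claims), per_slide)]


sources = {
    "site_a": ["Claim X", "Claim Y"],
    "site_b": ["Claim X", "Claim Z"],
    "site_c": ["Claim Y"],
}
verified = fact_check(gather(sources))
slides = assemble(verified)
print(slides)  # [['Claim X', 'Claim Y']]
```

Keeping verification as its own step means it can be tightened or swapped (for example, for a model-based checker) without touching the collection or formatting code.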

From the incumbent side, Google launched Firebase Studio, which competes directly with Bolt, Lovable, v0 and all the other prototyping tools. This is a great example of an incumbent arriving a little late to the game, perhaps a year late, but leveraging a significant product advantage and possibly also a distribution advantage. Its product advantage is Firebase itself, a commercial Backend-as-a-Service (BaaS) platform. It is similar to Supabase, the open-source backend used by the two main competitors, Bolt and Lovable. Like Supabase, Firebase makes it easy to create a database, handle user authentication and set up other backend features. Google is also leveraging its Gemini models optimised for coding, as well as its existing online coding studio environment. Initial feedback is very positive, and users feel it is comparable to Bolt. It is very easy for newcomers to get started with, although some users have complained about performance.

At the Infrastructure Layer, the news again comes from Google: the launch of A2A (Agent2Agent), an open protocol that enables agents to communicate and collaborate with each other securely. The idea is that it works across ecosystems, independent of the vendor and of the framework used to build each agent.
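The core idea, that agents built on different stacks can discover each other and exchange structured messages, can be illustrated with a small Python sketch. Everything here is hypothetical and deliberately simplified: it is not the A2A wire format (Google's announcement describes a protocol built on HTTP and JSON-RPC, with JSON "Agent Cards" for capability discovery), and the names `AgentCard`, `Registry` and `send` are made up. What it shows is the design choice that matters: one agent is a plain function and the other is a class, yet they interoperate because both only ever see a shared JSON message format and a declared list of skills.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AgentCard:
    """Hypothetical capability advertisement: who the agent is and what it can do."""
    name: str
    skills: List[str]


@dataclass
class Agent:
    card: AgentCard
    handler: Callable[[str], str]  # takes the request text, returns the reply text


class Registry:
    """Lets a client discover an agent by skill, without knowing how it was built."""

    def __init__(self):
        self._agents = []

    def register(self, agent):
        self._agents.append(agent)

    def find(self, skill):
        for agent in self._agents:
            if skill in agent.card.skills:
                return agent
        raise LookupError(f"no agent advertises skill {skill!r}")


def send(registry, sender, skill, text):
    """Route a JSON request to whichever agent advertises `skill`; return a JSON reply."""
    wire = json.dumps({"from": sender, "skill": skill, "text": text})  # what travels
    request = json.loads(wire)  # what the receiving side parses
    agent = registry.find(request["skill"])
    reply = agent.handler(request["text"])
    return json.dumps({"from": agent.card.name, "text": reply})


# Agent 1: a plain function.
def summarise(text):
    return text.split(".")[0] + "."


# Agent 2: a class instance, standing in for an agent from a different framework.
class WordCounter:
    def __call__(self, text):
        return str(len(text.split()))


registry = Registry()
registry.register(Agent(AgentCard("summariser", ["summarise"]), summarise))
registry.register(Agent(AgentCard("counter", ["count_words"]), WordCounter()))

print(send(registry, "planner", "summarise", "First point. Second point."))
# {"from": "summariser", "text": "First point."}
print(send(registry, "planner", "count_words", "three little words"))
# {"from": "counter", "text": "3"}
```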

🧇 Chips

🥊 Pressure on Nvidia continues

The pressure on Nvidia continues, both from customers designing their own chips (the DIY side) and from head-to-head competitors.

First, Google launched a new AI chip, Ironwood, the 7th generation of its TPU and the first TPU designed specifically for inference. Compared with the previous generation (Trillium), it offers 2x better performance per watt, 6x higher memory capacity and 4.5x faster data access. This new chip generation is linked to two AI trends we have been tracking: 1) the shift from training towards deployment at scale, which requires inference, and 2) stronger demand for reasoning models and AI agents, which also require inference compute.

Second, Huawei is preparing to launch its new AI chip, Ascend 910D. As one would expect, demand for Huawei chips is growing due to the US export restrictions. On the one hand, Huawei's chips perform worse than Nvidia's H100 (which is not even Nvidia's state-of-the-art chip, as its newer Blackwell series is already rolling out) due to the challenges of accessing key components and advanced chip-making equipment. On the other hand, Huawei appears to have excelled at combining chips into racks to build AI supercomputers at scale. Building efficient systems that get AI chips to work together is not a trivial task, and Huawei has introduced a rack system called CloudMatrix 384 that allegedly outperforms Nvidia's equivalent. This is a potentially very interesting turn of events in the race for AI chip superiority, one that could turn the US/China AI war dynamics upside down.

See you next time for more AI insights.
