✍️ The prologue

My name is Aris Xenofontos and I am an investor at Seaya Ventures. This is the weekly version of the Artificial Investor that covers the top AI developments of the last seven days.

This Week’s Story: Carnegie Mellon researchers publish study about AI’s impact on critical thinking

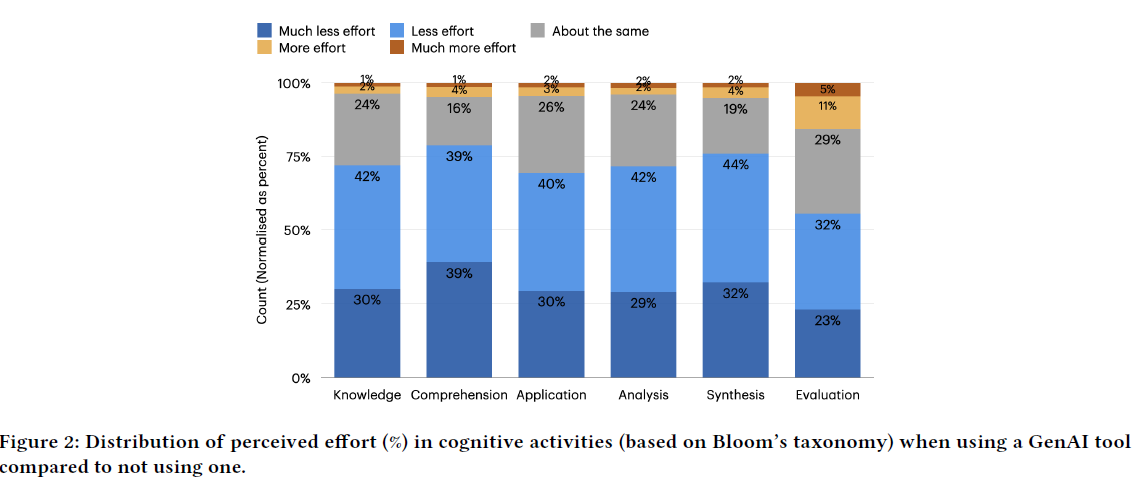

A few days ago, researchers at Carnegie Mellon and Microsoft published a study on the impact of GenAI on critical thinking among knowledge workers. The research found that while GenAI reduces cognitive effort, it can also decrease critical thinking. Higher confidence in GenAI correlated with less critical thinking, which occurred more in younger participants.

Is GenAI making us dumber? Or do AI’s benefits outweigh the costs? Is this any different from what happened with other technological innovations? What does this mean for the future of Tech?

Let’s dive in.

👥 If everyone is thinking alike, then somebody isn't thinking

The study by Lee et al. surveyed 319 knowledge workers who shared 936 examples of using GenAI in work tasks. The study suggests that GenAI tools can improve efficiency, but may also reduce critical engagement, potentially leading to over-reliance and diminished problem-solving skills. Specifically, key findings include:

Higher confidence in GenAI is linked to less critical thinking

Thinking inhibitors include a lack of awareness (over-reliance on AI), lack of motivation (belief that a task is unimportant) and lack of ability (difficulties in improving GenAI output)

GenAI tools reduce the perceived effort for critical thinking tasks

The use of GenAI results in a shift from task execution to task oversight

For knowledge and comprehension: from information gathering to information verification

For applications: from problem-solving to AI response integration

For analysis: from task execution to task stewardship

Another study published last month by the SBS Swiss Business School investigated the impact of AI tool usage on critical thinking using a set of 666 diverse participants (age, educational background, professional fields) in the UK. The findings showed a significant negative correlation between frequent AI tool usage and critical thinking. Also, younger participants exhibited higher dependence on AI tools and lower critical thinking scores compared to older participants.

This study comes a few months after the research conducted at the University of Toronto about the impact of GenAI on creativity. The research involved two parallel experiments with 1,100 participants, examining how different forms of LLM assistance influence unassisted human creativity. Key findings include:

Exposure to LLM assistance did not enhance originality in tasks, and in some cases, it decreased it and reduced the diversity of ideas

LLMs improved performance on divergent thinking tasks (connecting the dots between different concepts), but this did not translate into better performance in subsequent similar tasks where participants could not use the LLM

The study also found that participants across the board reported a decline in their perceived creativity after the experiment.

So, recent studies indicate that using GenAI makes us think less critically and less creatively. Does this mean that AI makes us dumber? It’s a bit more complicated than that.

🧠 The extended mind

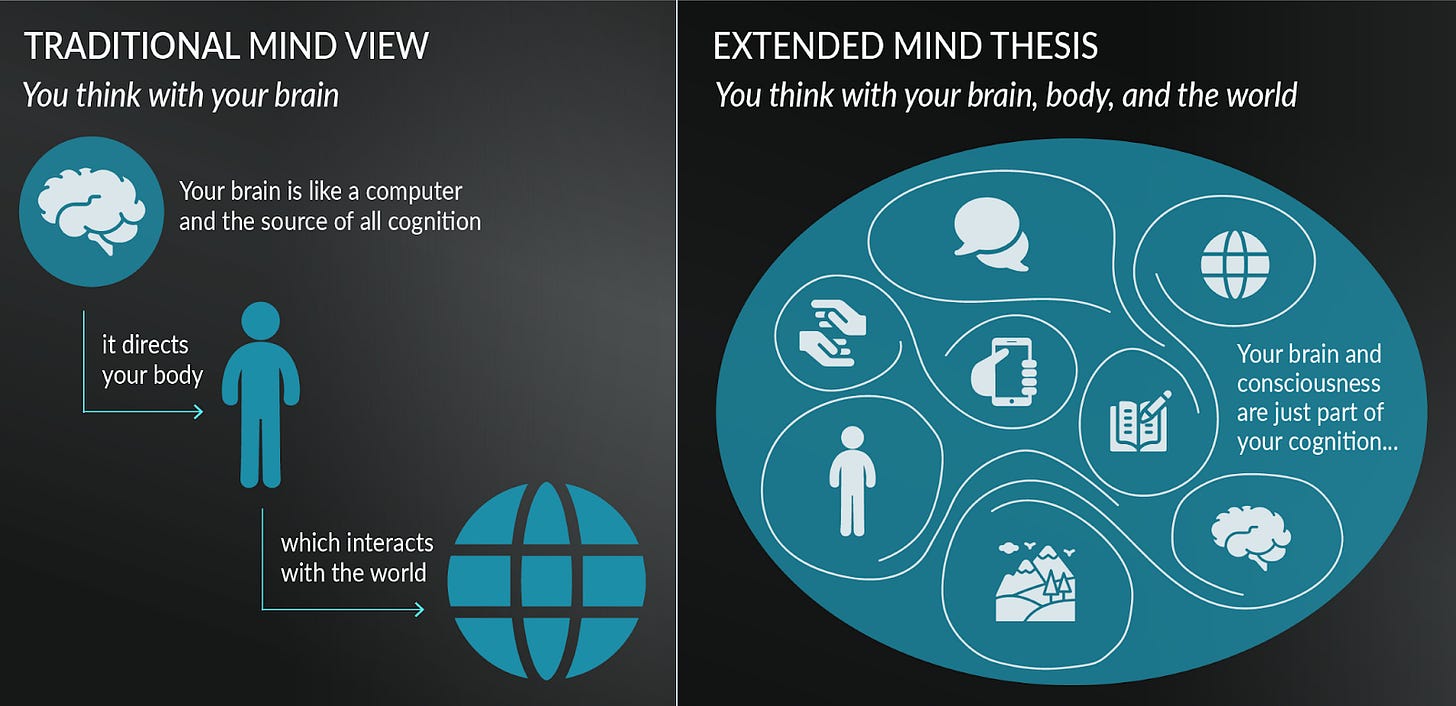

Let’s talk first about the benefits of AI on how our mind thinks, starting with a simple question: “how do we even define our mind?”

In 1998, the philosophers Andy Clark and David Chalmers presented the extended mind theory. According to this theory, tools that humans use to aid cognition can become parts of their mind. They use the example of a notebook as an integral part of a cognitive process for a fictional character, Otto, who has Alzheimer's. Otto uses a notebook to record information he can't retain in his memory. In this case, the notebook acts as a part of the character’s mind, similar to the role of a biological memory. This can be extended to technologies such as the smartphone or LLMs.

Professors from three Italian universities (Università Cattolica Milano, Istituto Auxologico Italiano IRCCS and Università Cattolica Brescia) integrated AI into the two-system thinking theory, which was popularised by Daniel Kahneman in the 2011 best-seller “Thinking, Fast and Slow”. In his book, Kahneman analysed the way we think and broke it down into two systems:

System 1: Fast, automatic, frequent, emotional, unconscious, requiring little or no effort. Used to solve “2 + 2 = ?”, drive a car on an empty road, etc.

System 2: Slow, manual, infrequent, logical, conscious, effortful. Used to direct your attention towards someone at a loud party, park in a tight parking space, etc.

The Italian professors analysed the integration of AI into human thinking as a System 0, which comes before System 1 and 2, manages enormous amounts of data, processes it and provides suggestions or decisions based on complex algorithms. However, unlike intuitive or analytical thinking, System 0 does not assign intrinsic meaning to the information it processes.

Therefore, there is an argument that AI enhances human thinking, rather than hinders it. AI-driven tools enhance certain cognitive functions, such as the ability to process and interpret large volumes of information quickly. This can be particularly valuable in data-driven fields like Finance and Healthcare, where timely and accurate decision-making is crucial. AI can also improve memory, attention, and decision-making by automating routine tasks and freeing up brain resources for more complex processes.

🎒 If we teach today as we taught yesterday, we rob our children of tomorrow

It is no secret that personalised learning is more effective than “one size fits all” education. However, Stanford University professors published a paper discussing the current limitations of personalised learning and recommended the use of LLMs in a hybrid model that blends AI with a collaborative teacher-facilitated approach. There are various benefits of embedding AI in personalised learning:

Tailored learning path: Adaptive learning platforms use real-time data analytics to create individualised pathways, allowing students to focus on areas requiring improvement while progressing at their own pace. Studies have shown that students using AI-powered platforms experienced performance increases of 25%-30% compared to traditional classroom settings.

Increased engagement: By customizing content based on students' interests and preferences, AI fosters deeper engagement and motivation. A McKinsey analysis highlighted that individualised learning routes could enhance student engagement by as much as 60%.

So, AI not only augments our mind and gives us “thinking superpowers”, but it also helps us improve through personalised learning. And, yes, there may be some negative effects from relying on AI to do some of the thinking for us. But isn’t this what happened with other technologies? Maybe. But not exactly.

🐨 The Cognitive Offloading concept

Indeed, studying the impact of technologies on our brain is not new. It is all linked to the concept of cognitive offloading. In 2016, E.F. Risko and S.J. Gilbert published a paper that defined cognitive offloading as the use of physical actions or external tools to reduce the cognitive demands of a task.

The drivers of cognitive offloading are:

our body’s attempt to save energy; significant energy (glucose) is consumed by our brains when we think deeply or multi-task, particularly when we are under stress

the limited capacity of our working memory

Offloading has various forms: i) using our body, e.g. gesturing, ii) using the external world as a repository of information, e.g. writing down information to remember it, or even iii) distributing knowledge across individuals, i.e. remembering “where” to find the information instead of “what” the information is in detail.

Overall, cognitive offloading improves thinking and problem solving along several dimensions, such as efficiency, speed and accuracy.

🖨️ The “printing press” effect

Using technology for cognitive offloading is something that we have been doing for centuries. The invention of the printing press in the 15th century is a great example. Critics feared written texts would erode oral memory traditions, yet literacy instead expanded collective intelligence by externalising knowledge. The technology led to i) increased literacy rates, ii) greater access to knowledge, and, as a result, iii) new forms of critical thinking and analysis through exposure to a wider range of perspectives.

More recent examples include the calculator, GPS and online search. The introduction of calculators in the 20th century enhanced computational efficiency and reduced cognitive load for complex calculations. GPS navigation helped drivers save cognitive effort and improved route optimization through real-time data integration. The Internet and online search democratised knowledge access for everyone, eliminating barriers created by information gatekeepers.

⚔️ A double-edged sword

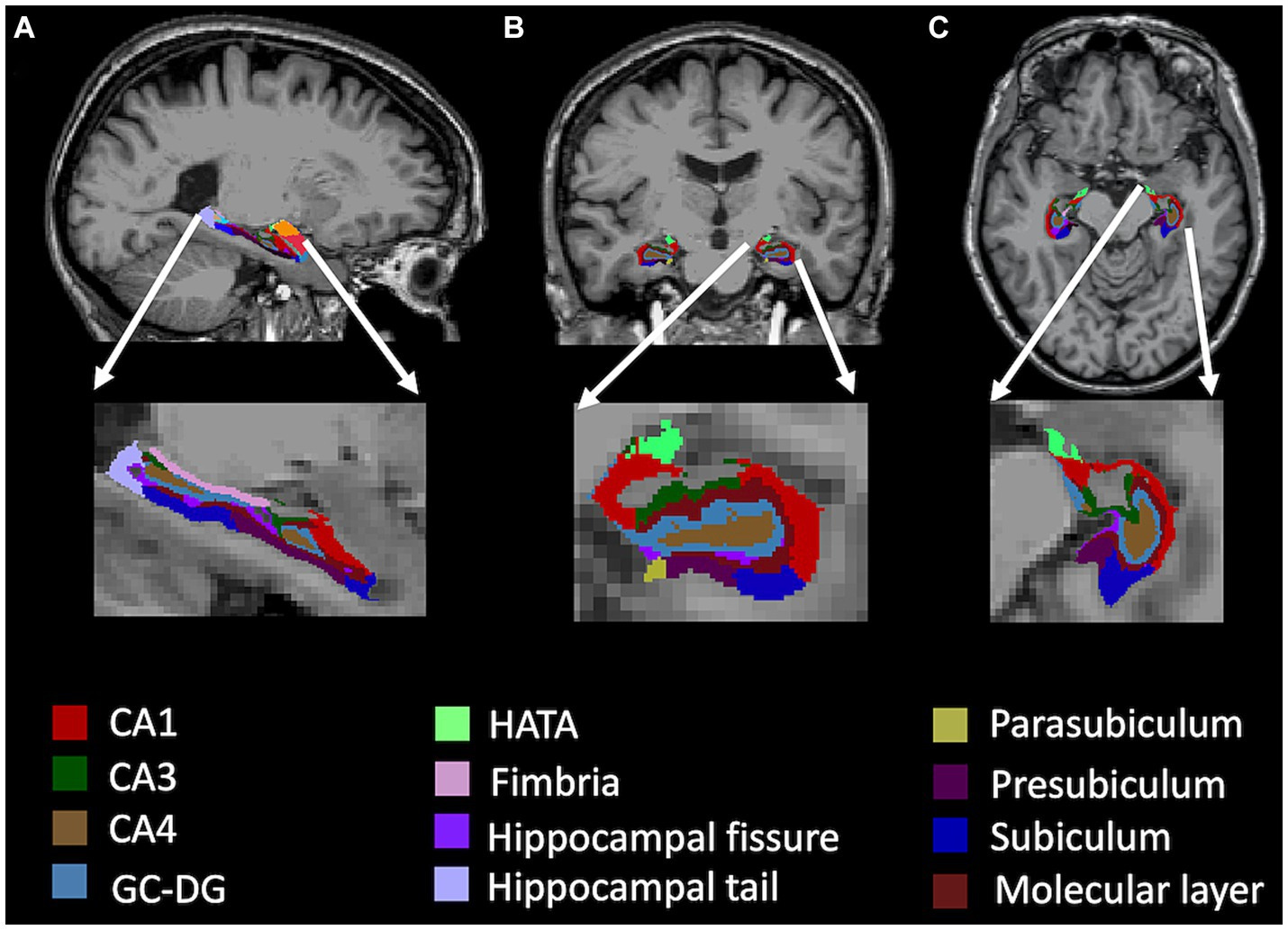

All of these cases come with their drawbacks. Calculators sparked a debate about their impact on mathematical skills, as learning experts worried that they could hinder the development of fundamental arithmetic skills and conceptual understanding. GPS has been criticised for its documented effect of atrophy in the spatial memory of regular users. Similarly, functional MRI studies have indicated that Internet users process information through rapid skimming rather than sustained analysis, weakening comprehension and retention.

While we lack similar medical studies for AI, we believe this technology is different and may potentially have more serious negative effects.

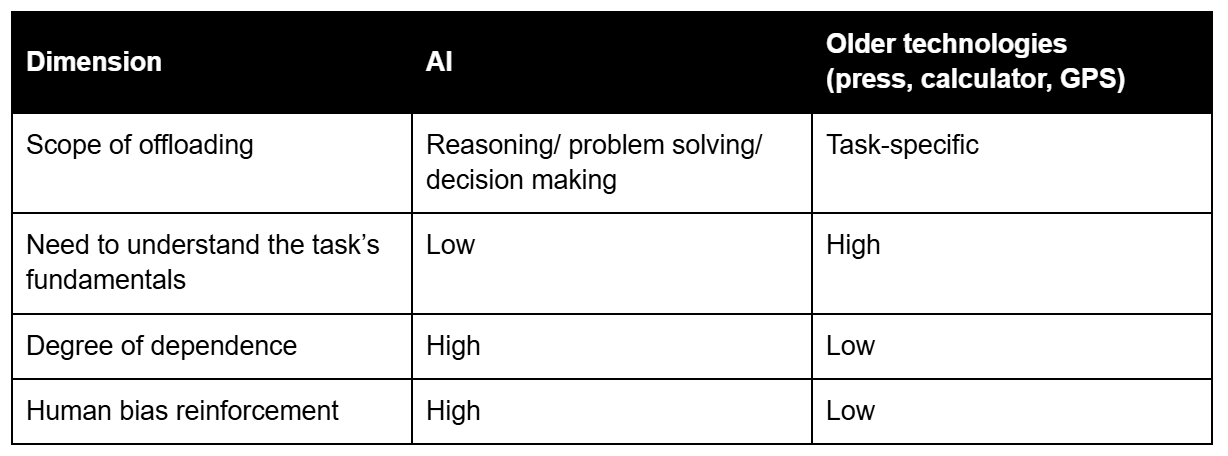

First of all, previous technologies primarily served as tools to enhance cognition while requiring human interpretation. GenAI models, which can independently produce text, code, images and decisions, have the potential to replace cognitive tasks, not just augment them. As a result, AI’s offloading scope is broader and reaches as far as reasoning, problem-solving, creativity and decision-making. This can lead to a higher degree of atrophy and dependence on AI. Second, while older technologies reduce the burden of specific tasks, they still encourage active cognitive engagement in other ways (e.g. reading enhances comprehension, calculators still require understanding of mathematical principles, etc.). In contrast, users may more easily rely on AI for decisions without fully understanding the underlying task and its logic, leading to a decline in critical thinking. Third, AI evolves and adapts to the information provided by the user, which in turn may reinforce biases and prevent diverse thinking. Calculators and GPS, by contrast, are static.

📕 Wrapping up

In summary, recent studies highlight the significant impact of GenAI on cognitive functions among knowledge workers, suggesting that while these tools enhance efficiency and reduce effort in critical thinking tasks, they may also diminish critical thinking and creative abilities. Key findings indicate a shift in cognitive engagement from execution to oversight, with a notable dependence on AI that varies by demographic factors. Additionally, integrating AI into cognitive processes, as discussed in theories like the extended mind and three-system thinking, suggests AI can augment human cognition in substantial ways, particularly in data-driven fields. However, the concept of cognitive offloading raises concerns about potential over-reliance on the technology, which could weaken fundamental cognitive skills.

What does this mean for the future of Tech? We expect:

increased regulatory oversight covering standards for transparency and accountability in the design of AI systems

a significant push towards developing systems that better integrate human intelligence with AI capabilities, e.g. explainable AI systems that require human confirmation before executing tasks

the incorporation of AI literacy as a fundamental component of the curriculum at schools, covering AI tool usage, critical assessment of AI outputs, etc.

further growth in personalised learning that enhances both knowledge acquisition and critical thinking skills

an increasing focus of professional training on building cognitive resilience, which promotes skills that AI cannot replicate easily, such as complex problem-solving, ethical reasoning and creative thinking

the emergence of educational tools that help retrain cognitive skills that have atrophied through cognitive offloading

To prepare ourselves for the future, we:

💳 Buy: AI compliance solutions, AI explainability tools, AI-native Edtech solutions (AI literacy, hyperpersonalised learning, cognitive “gymnastics”, etc.), corporate reskilling solutions

💵 Sell: legacy software for schools, traditional academic institutions that are slow to adopt AI, traditional professional training programmes

See you next week for more AI insights.
