The Artificial Investor Issue #46 - February 2025 recap

My name is Aris Xenofontos and I am an investor at Seaya Ventures. This is the monthly version of the Artificial Investor that covers the top AI developments of the previous month.

The scaling laws are dead; long live the scaling laws! Deep Research: the new commodity. The OpenAI soap opera continues. Can Europe catch up? Software developer: a legacy job? Humanity’s Last Exam. All this and much more in today’s post. Let’s dive in.

🚀 Jumping on the bandwagon

We had a megaround last month with Anthropic raising 3.5 billion dollars. Other large rounds highlight investor appetite for Verticalised AI (Abridge’s 250-million and Harrison’s 110-million fundraises in Healthcare, Eudia’s 100-million fundraise in Legal Services), Automation (Tines’ 125-million round), the Infrastructure Layer (DDN’s 300-million raise in data infrastructure, Krutrim’s 230-million raise in AI Cloud infrastructure, EnCharge’s 100-million raise in chips, ElevenLabs’ 180-million raise in Voice AI) and Humanoid Robotics (Apptronik raised 350 million dollars and Neura Robotics 125 million dollars).

In terms of exits, we saw a couple of notable small acquisitions of AI players by large data and hardware companies. MongoDB acquired Voyage to enhance its AI Integration and data retrieval capabilities for 220 million dollars and LG acquired Bear Robotics to expand its robotics offering for 300 million dollars. Finally, CoreWeave, the AI datacentre infrastructure provider, announced it is filing for an IPO seeking a 35-billion-dollar valuation with the intention to go public in March’25.

📈 On pink paper

The scaling laws are dead. Long live the scaling laws! Many will remember February 2025 as the month in which the launch of a Chinese reasoning model, Deepseek R1, turned the AI world upside down. The main noise was around the fact that the Chinese frontier lab (built as an internal project out of a hedge fund that had spare GPU capacity!) released a model that matched OpenAI’s top reasoning model in various benchmarks while claiming that it cost only 6 million dollars to train. This was initially compared to the billions of dollars that OpenAI has raised to train its models and that the hyperscalers have invested in AI infrastructure. How can a Chinese model be 100x cheaper than OpenAI’s model with the same quality?

The world panicked: everyone started talking about how China has caught up with the US on AI, how much money the US firms have wasted and how AI chip demand has been overestimated. Nvidia was down more than 16% on the next trading day, losing 600 billion dollars of value. Of course, reason (no pun intended) prevailed as the story got broken down and analysed calmly.

Specifically:

There is a difference between buying Nvidia GPUs to use for five years and leasing them for a few weeks. This takes us from the billions OpenAI raised to hundreds of millions of dollars.

Deepseek mentioned that the 6-million-dollar training cost refers to the last (and cheapest) part of the training process of the model.

Analysts pointed out that Deepseek used its previous LLM, Deepseek-V3, to train its reasoning R1 model. This takes us to a few dozen million dollars for the complete training of the Chinese model.

As a result, we are really talking about Deepseek delivering a 30%-50% efficiency gain vs. OpenAI’s SOTA reasoning model, which was released 8 months before Deepseek’s model.

Our conclusions, which we also published in the Artificial Investor Issue 44 (DeepSeek and AI myth), are:

Deepseek indeed delivered strong efficiencies. The Chinese data scientists used a couple of very innovative techniques to improve GPU utilisation and communication between model components, optimise training, etc.

The level of efficiency was broadly in line with the training efficiency curve of LLMs we have observed the last 24 months. It’s just that everyone’s focus was on language benchmarks as opposed to

China is indeed catching up with the US at the Model Layer. This was after all one of our 2025 AI predictions. You can read all of them here.

So, what happened after that? We observed three types of reactions:

On the small reasoning model side, OpenAI quickly released its o3-mini model and made it available through low-cost subscriptions to compete with Deepseek-R1.

Major players like OpenAI, Anthropic and xAI promptly released new large-scale models:

OpenAI released GPT-4.5 with a controversial pricing model that was extremely expensive, drawing criticism.

Anthropic released Claude Sonnet 3.7.

xAI made huge noise by releasing Grok 3, the third version of its LLM, which was trained on its new AI supercluster of 200,000 GPUs. Elon Musk’s company has also faced criticism for i) training the model to censor questions about Trump and Musk, and ii) benchmarking itself against OpenAI by comparing GPT-4’s responses with answers for which Grok 3 could pick the best among 62 trial responses.

There were some conflicting AI infrastructure investment announcements. Microsoft cut some of its planned investments, suggesting a possible decrease in demand for compute (driven by the efficiency highlighted by Deepseek), while Alphabet and Meta said they would grow their AI investment in the next two years.

We believe the market has in general overreacted to the news, looking for reasons to argue that AI model scaling is or is not over, depending on which side one was on. The scaling laws by definition have always had a ceiling (per dimension) and it looks like we are reaching a ceiling for the dimensions of public text data and model parameters. But there are more dimensions to scale on (private text data, video data, inference, reasoning), plus we expect to observe once again the Jevons paradox: the cheaper intelligence gets, the more demand there will be.
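To make the Jevons paradox argument a bit more tangible, here is a minimal sketch. It assumes a constant-elasticity demand curve (quantity = k × price^(-elasticity)), which is our own simplification for illustration and not something measured for AI compute; the elasticity values and the 10x price drop are hypothetical.

```python
def total_spend(price: float, elasticity: float, k: float = 1.0) -> float:
    """Total spend = price * quantity, with quantity = k * price ** (-elasticity).

    The constant-elasticity demand curve is an illustrative assumption.
    """
    quantity = k * price ** (-elasticity)
    return price * quantity


if __name__ == "__main__":
    # Hypothetical scenario: the unit price of "intelligence" falls 10x.
    for elasticity in (0.5, 1.5):
        before = total_spend(1.0, elasticity)
        after = total_spend(0.1, elasticity)
        trend = "rises" if after > before else "falls"
        print(f"elasticity={elasticity}: total spend {trend} "
              f"from {before:.2f} to {after:.2f}")
```

In this toy model, total spend only grows when elasticity is above 1, so the Jevons argument is effectively a bet that demand for intelligence is highly price-elastic.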

Deep Research: the new commodity

Another big piece of news in February was the creation of a new AI product category: Deep Research. Among hyperscalers, the pioneer was Google with its Deep Research product, which by itself is a piece of news since Google has been a follower in the GenAI wave, in terms of model and product releases. At the same time, let's not forget that Google was not the pioneer in this category - yes, I'm talking to…You.com!

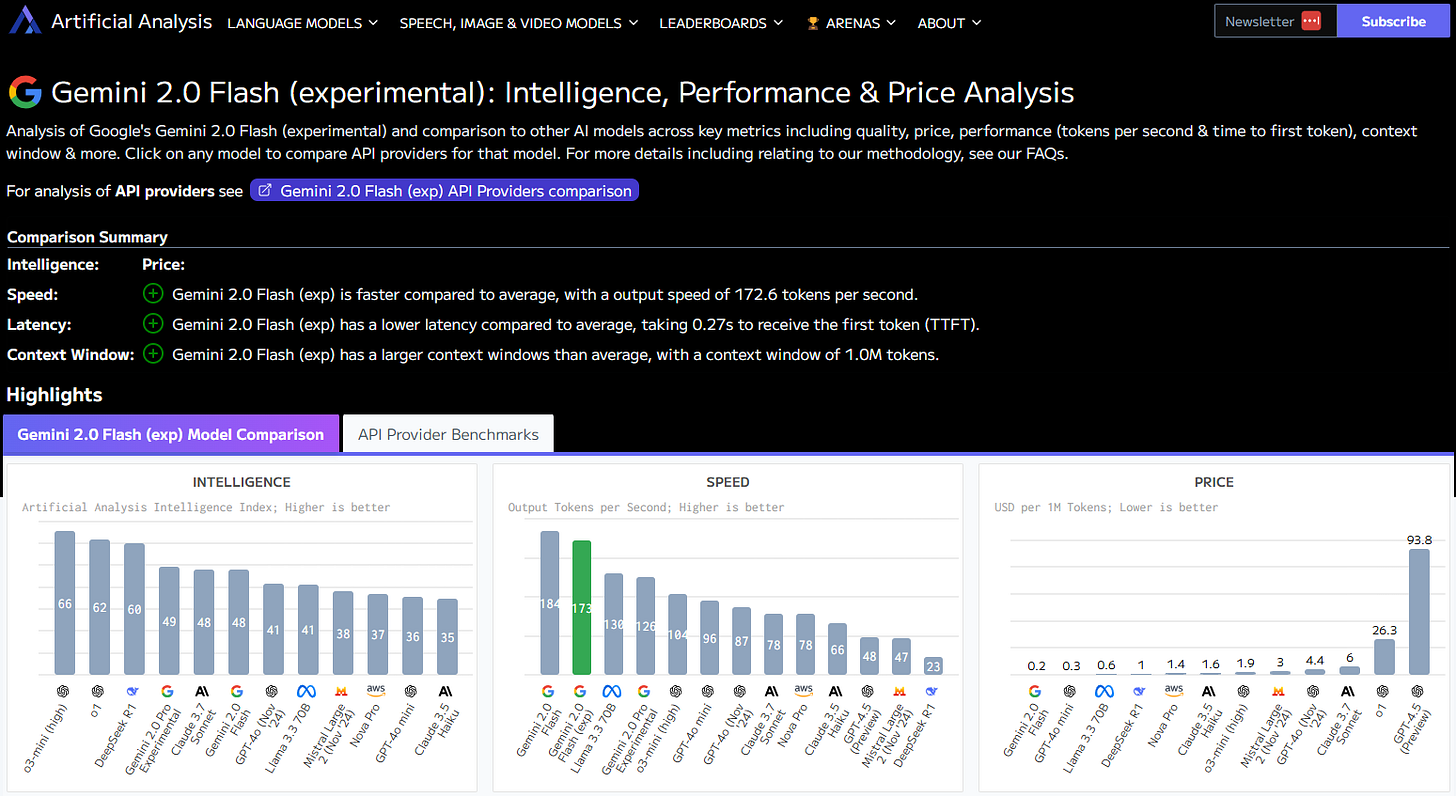

So Google launched Deep Research, which effectively uses a reasoning model, its Gemini Flash 2.0 Thinking, and what it does is an evolution of what Perplexity has been doing. The difference is that Perplexity has been using the first-wave LLMs (without reasoning capabilities) combined with Web search to give a one-shot answer to a research question using 5-10 sources. Products in the Deep Research category go further than that.

Let's take the example of “drinking and driving regulations between the US and Europe” as a research question. The model does the following:

Comes up with a plan of what to do using a chain of thought

Goes to the Internet to look for information

Analyses that information (reviews it, does some comparisons, etc)

Synthesises the information and makes some conclusions putting together 3-4 pages
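The four steps above can be sketched as a small pipeline. This is only an illustration under our own assumptions: `plan`, `deep_research` and `fake_search` are hypothetical names, the planner is a hard-coded stand-in for a reasoning model, and the search function returns placeholder text instead of calling the Web. It is not how Google or any other vendor implements its product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Source:
    url: str
    text: str


def plan(question: str) -> List[str]:
    """Step 1: split the question into sub-queries.

    A real product would ask a reasoning model; this fixed list is a stand-in.
    """
    return [f"{question} overview", f"{question} United States", f"{question} Europe"]


def deep_research(question: str, search: Callable[[str], List[Source]]) -> str:
    # Step 2: gather sources for every sub-query, de-duplicated by URL.
    sources: Dict[str, Source] = {}
    for sub_query in plan(question):
        for source in search(sub_query):
            sources.setdefault(source.url, source)

    # Step 3: analyse (stand-in: keep non-empty sources and turn them into notes).
    notes = [f"- {s.text} [{s.url}]" for s in sources.values() if s.text.strip()]

    # Step 4: synthesise the notes into a short report.
    lines = [f"Research question: {question}",
             f"Sources reviewed: {len(notes)}",
             "Findings:"] + notes
    return "\n".join(lines)


def fake_search(query: str) -> List[Source]:
    """Hypothetical search backend returning one placeholder source per query."""
    slug = query.lower().replace(" ", "-")
    return [Source(url=f"https://example.com/{slug}",
                   text=f"Placeholder note for '{query}'")]


if __name__ == "__main__":
    print(deep_research("drinking and driving regulations", fake_search))
```

Swapping `fake_search` for a real search client and `plan` for a call to a reasoning model is where the actual product work (and cost) sits.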

While the original version of Perplexity would look at 10-15 sources and come up with an answer of one page at most, Google Deep Research looks at 100-200 sources and comes up with four pages that, in our example, would include an introduction (perhaps with an explanation of the topic and why it is important), a summary of what the differences are, a deep dive into the US (perhaps looking at each state and telling you which states are stricter vs. more tolerant) and Europe separately, and finally a conclusion (that would not only summarise the findings, but also talk about why the topic is important, what the effects of strict laws on alcohol consumption have been, etc).

Within a month, Perplexity and OpenAI launched their own products. They are all very similar, and they are actually piggybacking on something that You.com (a startup with a lesser-known brand and less distribution power) did first a few months ago. Finally, a team published on Hugging Face and GitHub an open-source version of a Deep Research product that can call any of the AI reasoning models the user prefers (the product is free but one would have to pay for the API fees of the reasoning models of course). On the back of that, OpenAI changed its Deep Research pricing model: it had started by making it available only to 200-dollar-per-month subscribers, but switched to two free uses per month for free subscribers and 10 free uses for 20-dollar-per-month subscribers, most likely in an attempt to remove some friction.

Our thoughts on this product category are:

It's worth the money if you think of the fact that you get the quality of an average university graduate doing research for three days, but you get that at a fraction of the cost (1.5 dollars vs. 300 dollars) and a fraction of the time (10 minutes vs. 3 days). We use it every week and we think you should try it too!

Having tried all the products, we think OpenAI is currently the leader in this category, probably because it is using the best underlying model, which is a reasoning model fine-tuned for research.

This AI product category will rapidly get commoditised (like other thin model wrappers)

There is an important limitation: the products draw on a mix of credible and non-credible sources. We think in the future we will have plugins for McKinsey reports, Nature publications, etc.

There is another important limitation: interpretation of the results. In our example, what the conclusions actually mean to a lawmaker in Australia who wants to introduce a new law about alcohol consumption is far from being covered by the product.
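For those who like to see the numbers, the cost and time comparison from our first point works out as follows. The dollar and time figures come from the text above; whether "3 days" means calendar days or 8-hour working days is our assumption, so the sketch shows both.

```python
GRADUATE_COST_USD = 300.0
TOOL_COST_USD = 1.5
GRADUATE_DAYS = 3
TOOL_MINUTES = 10


def ratio(baseline: float, alternative: float) -> float:
    """How many times larger the baseline is than the alternative."""
    return baseline / alternative


cost_ratio = ratio(GRADUATE_COST_USD, TOOL_COST_USD)

# Assumption 1: three full calendar days (24 hours each).
time_ratio_calendar = ratio(GRADUATE_DAYS * 24 * 60, TOOL_MINUTES)

# Assumption 2: three working days of 8 hours each.
time_ratio_working = ratio(GRADUATE_DAYS * 8 * 60, TOOL_MINUTES)

if __name__ == "__main__":
    print(f"Cost: {cost_ratio:.0f}x cheaper")
    print(f"Time: {time_ratio_calendar:.0f}x faster (calendar days)")
    print(f"Time: {time_ratio_working:.0f}x faster (working days)")
```

Either way, the gap is two orders of magnitude on both cost and time.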

The OpenAI soap opera continues

Another month, another new episode in the OpenAI drama. OpenAI made some noise with the first big AI Super Bowl ad, which was part of the 330 million dollars spent last year in advertising by AI companies in total. The Super Bowl ad was quite impressive and raised a lot of eyebrows.

At the same time, SoftBank is investing 40 billion dollars in OpenAI at a valuation of 240 billion dollars, leaving many of us wondering how much upside there still is in the investment. SoftBank would have to believe that OpenAI will soon belong to the exclusive club of the 1-trillion-dollar companies, like Apple, Nvidia, Meta, Microsoft, Amazon, Tesla and Saudi Aramco! In the meantime, Elon Musk tried to make an offer to buy OpenAI and OpenAI's board declined it. This is likely part of the testosterone battle we have witnessed between Elon and Sam (Altman), which, as of recently, includes Zuck. CNBC reported that Meta will release a standalone AI app to compete with ChatGPT and Sam posted on X that they should probably launch a social network app soon (being obviously sarcastic about it).

Anyway, on a more serious note, ChatGPT, the killer AI app at the moment, reached the sixth position of US mobile traffic in February. Google.com remained number one with 29% of traffic share while ChatGPT took a 2.3% share. Still a long way to go, but quite impressive to be already number six and above X.com, Reddit, and Netflix, and very close to Instagram and WhatsApp.

Also, OpenAI filed a long patent application that gives a glimpse of the company’s product roadmap. There are two main topics there:

AIOps: applications related to all modalities (text, audio and video) that help people create synthetic data, process data, link models together, etc. - effectively, the work that OpenAI’s AI engineers have been doing to take their bare models and bring them to a user app

Hardware: models and applications related to various hardware, from earphones to humanoid robots

⚔️ A double-edged sword

Can Europe catch up?

The biggest piece of news from Europe was the change in sentiment and stance on the trade-off between AI innovation and safety. Last year, the political line was safety first, but this year, as witnessed at the AI Action Summit in Paris, it is about putting innovation first. There, the French President Macron announced a 110-billion-dollar investment in AI (which is like other political announcements that put together a mix of public and private initiatives to sum up to a relatively big number), which is positive for the region albeit a fifth of the number that Trump announced for the US last January. What’s more interesting is that, from a more practical perspective, there were a couple of EU regulations that were being prepared and are getting shelved: the new ePrivacy legislation related to online advertising, and the AI Liability legislation. Instead, the 2025 EU legislative roadmap is focused on the Innovation Act and the AI Cloud and Development Act, which aim to support startups and other innovative companies, and enhance access to data.

Innovation finds a way

Another episode in the US-China AI Cold War was an announcement that Apple is partnering with Alibaba in order to integrate its AI models in iPhones launched in China (given it cannot use any of the American AI models due to export bans). This is an interesting twist, because it demonstrates once more that innovation eventually finds its way to break legal barriers and people and companies get access to the innovation they demand. This raises the question: “What's the point of export bans and regulations on technology and AI? Do they really have any effect? And if they don't, should we be focusing our energy somewhere else?”

Regardless of the effectiveness of restrictions, the two sides continue to march on in the US-China AI Cold War. China has reacted to Trump’s tariff announcements by tightening the rules around investment and M&A between Chinese and foreign Tech companies. On the other side, in reaction to the Deepseek news, US lawmakers pushed for a ban on the use of the Chinese application in US government-related entities, amidst security concerns around the Chinese government getting access to user data.

Software developer: a legacy job?

A new report came out about the impact of AI on employment and the sentiment seems to be shifting slowly from AI augmentation towards AI automation and replacement. This is something we expected, as AI capabilities grow. What is also special about this report is that it is practical and bottom-up. The Anthropic Economic Index is based on an analysis of the nature and volume of user prompts on the company’s models. The study showed that more than one in three jobs use some AI for at least 25% of their tasks, and that about 60% of workers use AI for augmentation whilst as many as 40% of workers use AI for automation. In addition, the analysis showed that the highest AI adoption rates are in software engineering roles, which account for about 37% of all user queries, and the lowest adoption is in physical jobs, which account for 0.1% of queries. This is something we have also seen in the companies we have invested in. We carried out a survey across more than 20 companies of different sizes, sectors and business models, and the feedback was quite consistent: software development is the number one use case currently.

Talking about how software engineering jobs are changing, it was reported that the leading AI developer tool, Cursor, reached 100 million dollars of annual recurring revenue faster than any other Tech company in history: in less than two years since they were founded. Cursor's rapid growth is impressive because let’s not forget that it targets the most tech-savvy users of all: software developers.

Also, OpenAI's model used for coding performs better than 99.8% of developers in various benchmarks. This means that out of about 30 million developers worldwide, only the top 0.2%, or about 60,000 developers, match its performance. Given that Google has about 30,000 and Microsoft about 60,000 software developers, an AI developer agent should be able to get a job in one of these two companies.
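The back-of-the-envelope calculation above is easy to reproduce. The function name is ours and purely illustrative; the inputs (about 30 million developers, a 99.8% percentile) are the rough figures quoted in the paragraph.

```python
def developers_matching_model(total_developers: int, percentile_beaten: float) -> int:
    """Number of developers NOT beaten by the model.

    percentile_beaten is the share of developers (in %) the model outperforms.
    """
    share_remaining = (100 - percentile_beaten) / 100
    return round(total_developers * share_remaining)


if __name__ == "__main__":
    matching = developers_matching_model(30_000_000, 99.8)
    print(f"Developers matching the model: {matching:,}")
```

That group is the same size as Microsoft's estimated developer headcount and twice the size of Google's, which is what makes the comparison striking.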

We are using various AI software development tools, such as Bolt and Cursor, on a daily basis and plan to write a detailed post about these tools and their impact on software development. Here are a few spoilers:

The efficiency gains from these tools are huge and will likely change software development completely in the next two to three years

Software developers will likely not disappear; their role will change, and fewer may be needed, but they might also get paid more

The quality of code from these tools is average because they are trained on both good and bad code. Practical use requires real software development knowledge.

We'll share more details in our upcoming blog post.

In other AI-risk-related news

A recent study examined the impact of Generative AI on critical thinking skills. We wrote an extensive piece in Artificial Investor Issue 45 - Does AI make us dumber? - check it out.

Thomson Reuters emerges victorious in groundbreaking AI copyright battle

🧩 Laying the groundwork

🔢 Models

The most notable model launches last month came from China. We have been giving a fair amount of real estate to Chinese model launches in the past on this blog; hopefully, since the Deepseek-R1 launch everyone will pay a bit more attention now 🙂 Alibaba took the lion's share this time around, introducing new versions of its Qwen open-source model series:

Qwen 2.5-1M, the first LLM with a very large context window beyond Google’s Gemini (Gradient have also launched one but didn’t have broad use). And, it’s open source!

Qwen2.5-VL, the cutting-edge Vision-Language Model with image recognition capabilities that expanded to include plants, animals, landmarks, IPs from film and TV series and products.

Qwen2.5-Max: the flagship LLM, which is a large-scale MoE model pretrained on 20 trillion tokens, post-trained with SFT and RLHF, and available through Qwen Chat and Alibaba Cloud API. The model outperforms DeepSeek V3 in benchmarks such as Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond.

QwQ-Max-Preview: a Large Reasoning Model competing with OpenAI’s o3 and Deepseek-R1

Wan 2.1: a video generation model that competes against OpenAI's Sora and is the first video model to support both Chinese and English text generation.

We also saw the launch of an open-source lyrics-to-song generation model by YuE. It’s fun to play with!

US BigTech companies also launched some new models. These include Microsoft’s previously announced small-model series, Phi-4, and Google’s already-previewed Gemini-2.0 Pro (which takes the top spot in all categories in the relevant benchmarks and connects with YouTube, Maps, and Search), as well as new models, such as Google’s open-source VLM, PaliGemma 2 Mix, NVIDIA’s Llama Nemotron models for Agentic AI development and IBM’s Granite 3.2, which is an open-source model that combines reasoning and vision. It seems like you are no one in the Tech world nowadays if you haven’t launched your own LLMs.

Other recently released models include Mistral’s Le Chat and Small 3, Allen Institute's Tülu 3 and Omnihuman’s OmniHuman-1.

In February a lot of publicity was given to a new AI model benchmark called “Humanity’s Last Exam” (HLE), which we would like to comment on briefly. The benchmark was developed collaboratively by nearly 1,000 subject-matter experts from over 500 institutions across 50 countries and aims to tackle “benchmark saturation,” wherein top LLMs get near-perfect results on existing benchmarks, such as MMLU, making it challenging to gauge genuine AI progress. HLE consists of 3,000 difficult questions drawn from subjects like mathematics, humanities and natural sciences, that go beyond easy web lookups or simple database retrievals. The format of the questions is multi-modal: about 10% of the questions require both text and image comprehension and the rest are text-based, consisting of multiple-choice and exact-answer questions. Most LLMs have scored below 10%, but recently OpenAI Deep Research broke the barrier of a 25% score. Humans score below 5%.

Below is an example question. Good luck! 🙂

🧱 Infrastructure

Deepseek what? It looks like many leading AI companies around the world are not very fussed about the Deepseek efficient-AI news. Apple is planning to invest 500 billion dollars in expanding its AI infrastructure, including a new factory in Texas, Meta is in talks for a 200-billion-dollar data centre project, Alibaba plans to invest 52 billion dollars in AI Cloud over the next three years and AWS announced an 11-billion-dollar investment in Georgia, US for an AI datacentre.

🤖 Robotics

The year of robotics. 2025 looks like it’s going to be the (first) year of robotics. Robot dexterity is going to the next level with humanoids imitating signature movements by famous athletes, such as Cristiano Ronaldo, and robot dogs running 100 meters in less than 10 seconds. It also looks like Meta is planning to jump on the humanoid bandwagon with a strategic investment in the space, managed by new leadership. The year of robotics includes autonomous vehicles. Things are heating up in the US where Lyft has partnered with Mobileye to launch robotaxis in Dallas by 2026 and May Mobility launched the first driverless commercial ridehailing service in Georgia. Chinese firms are not standing still, as BYD launched a new generation of its God's Eye autonomous driving system.

In other hardware-related news:

Amazon unveils upgraded AI assistant, Alexa+, one year after it was announced

Arm is disrupting the Semiconductor industry with the launch of its own chip, securing Meta as a major customer

🍽️ Fun things to impress at the dinner table

Read my mind. Meta researchers have developed a system that decodes someone’s brain activity and converts it into text. At the same time, Neuralink unveiled a similar technology that generates hand-written thoughts.

Eye contact. This Zoom user leverages AI to appear to make eye contact on Zoom calls while actually moving around the room.

Hear me out. G. Gerganov created his own AI language that allows voice AI models to talk to each other faster and with 100% reliability.